I know what you’re thinking: another “I built something with AI” post. But hear me out. I’ve been coding with AI since ChatGPT’s early days, and as a lead developer for a SaaS company, I’ve watched these tools evolve from promising experiments to legitimate development partners. Here’s my unfiltered experience taking an app from concept to Chrome Web Store using nothing but AI assistance.

This process took many months of reading up, trying and failing, so I am sure a lot of the products I mention have moved on (in fact I know they have, as I still use them to this day). I mention them to give you an insight into my journey and the obstacles I encountered and overcame.

The short version: AI built a production-ready application in a week (compared to my estimated month manually), but the journey from GitHub Copilot to Claude with MCP servers taught me that tool choice matters more than I ever expected.

The early days of Copilot

When GitHub Copilot launched, it promised to be the ultimate AI pair programmer. I was excited to test drive it, but reality was a different story.

Copilot excelled at narrow, well-defined tasks but stumbled hard on anything open-ended. Ask it to “implement standard authentication within the current framework” and you’d watch it spiral into conflicting code patterns and broken dependencies. It was like having a junior developer who could write perfect syntax but had no understanding of system architecture.

Building an app from scratch with AI

Around this time, I’d been mulling over an app concept that addressed something we all pretend doesn’t bother us: those endless terms and conditions nobody reads. You know the ones — walls of legal text that might as well be written in ancient Latin.

Initially, I dismissed the idea. The human expertise required to properly summarise legal documents seemed prohibitively expensive and slow. But once I was able to access AI models via an API, I thought it might work.

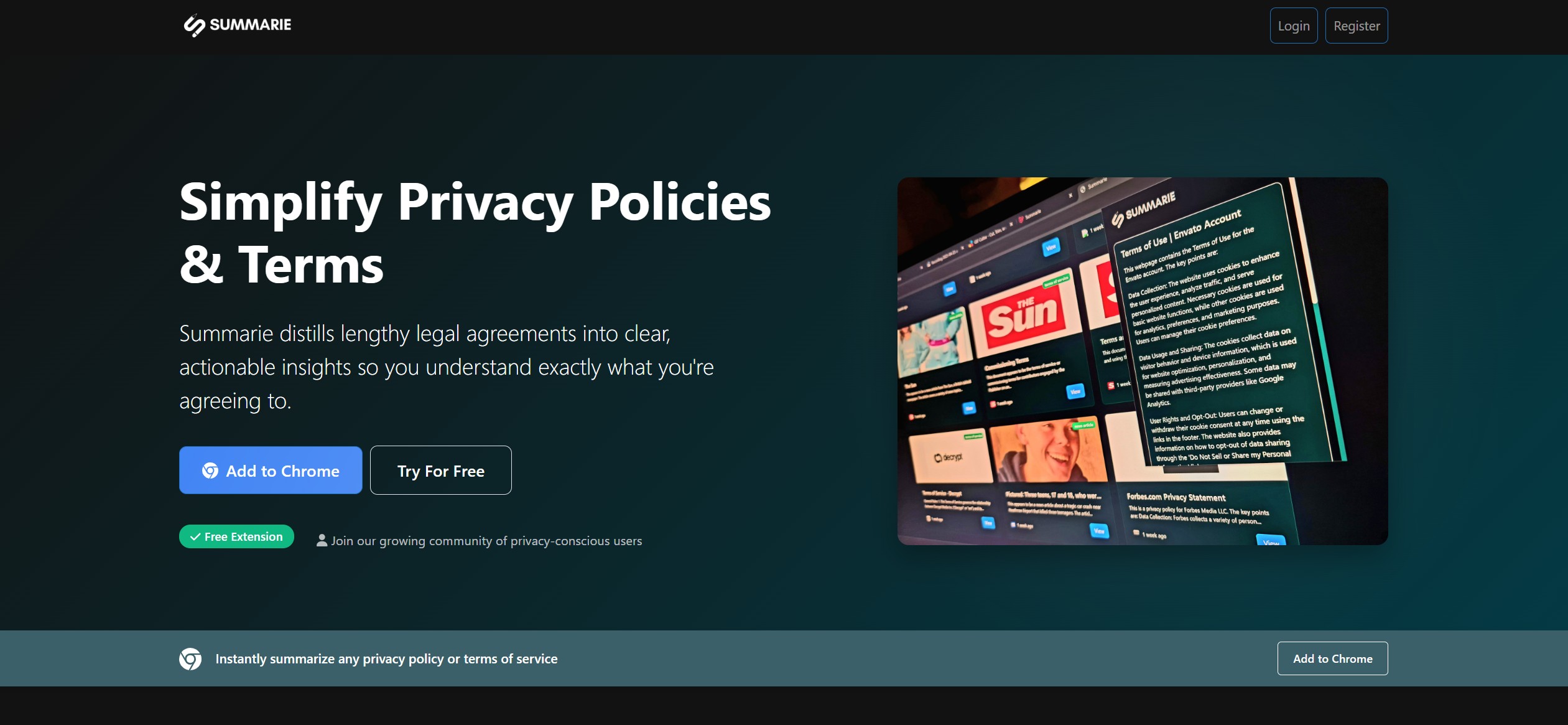

That’s how summarie.co was born. A Chrome extension that could give users instant summaries of terms and privacy policies right from their browser, with a web dashboard to track usage and previous summaries.

So I gave myself a task: use AI to code the app without making any code changes myself. I would review the code like a lead developer in a PR and offer feedback, but I couldn’t touch the code myself.

GitHub Copilot wasn’t up to the task at this time, so I looked around for alternatives.

Cursor’s single-file problem

I’d downloaded Cursor after hearing it had better context awareness than Copilot.

The plan was simple: spin up a Laravel project with Docker, add authentication scaffolding, and let Cursor handle the rest. For any Laravel developers reading this, that should be a couple of basic commands through a Docker container.

Cursor immediately hit a wall — it couldn’t execute commands through Docker. Strike one.

I ended up setting up the barebones project myself and handed over a fairly open brief: create a Chrome extension that could summarise webpage content, send it to an API endpoint, interface with an LLM service, and store results in a database.

Wrong move. Cursor politely informed me it could only work on one file at a time. For a multi-component project requiring intelligent architectural decisions across the entire codebase, this was like trying to conduct an orchestra whilst blindfolded.

Traycer.ai — the AI comedian

Another Google search led me to Traycer.ai, which marketed itself as a multi-file agent for pair programming. The VS Code extension looked promising.

This is where things got interesting. Traycer didn’t just start coding — it thought. I watched it verbalise its entire problem-solving process, complete with little self-congratulatory moments when it discovered services and framework patterns. It was oddly endearing, like watching a developer think out loud.

The real game-changer was the planning phase. Unlike Cursor, Traycer let me refine the approach before it wrote a single line of code. This felt much closer to actual pair programming.

When I gave it the green light, it delivered everything: migration files, models, controllers, Chrome extension JavaScript — the lot.

Lacking web search capabilities can really hurt development

Trusting that “AI has been trained on the whole internet,” I let it run. Big mistake.

The migrations failed spectacularly. The AI didn’t know the current date, so all migration files were timestamped as zero. Instead of the proper Laravel format like 2024_01_15_143022_create_summaries_table.php, I got files named 0000_00_00_000000_create_summaries_table.php. Since Laravel runs migrations in chronological order, the foreign key constraints threw a fit.
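To show why the zeroed timestamps mattered, here is a minimal Python sketch of the naming scheme. It is not Laravel's own code. It assumes the `YYYY_MM_DD_HHMMSS_` prefix shown above and that migrations are picked up in filename order. The function name `migration_filename` and the `create_users_table` migration are hypothetical, chosen only to illustrate a foreign key dependency.

```python
from datetime import datetime


def migration_filename(name: str, when: datetime) -> str:
    """Build a Laravel-style migration filename: YYYY_MM_DD_HHMMSS_<name>.php."""
    return f"{when.strftime('%Y_%m_%d_%H%M%S')}_{name}.php"


# Hypothetical example: users must exist before summaries can reference it.
users = migration_filename("create_users_table", datetime(2024, 1, 15, 14, 30, 22))
summaries = migration_filename("create_summaries_table", datetime(2024, 1, 15, 14, 31, 5))

# The zero-padded prefix means a plain filename sort is also a chronological sort.
ordered = sorted([summaries, users])

# With an all-zero prefix the order falls back to the rest of the name,
# so create_summaries_table now sorts ahead of create_users_table.
zeroed = sorted([
    "0000_00_00_000000_create_users_table.php",
    "0000_00_00_000000_create_summaries_table.php",
])
```

In the zeroed case the table holding the foreign key would be created before the table it points at, which is the kind of ordering failure described above.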

I fixed the timestamps manually and moved on to the frontend. More errors. My confidence evaporated as I found myself in an endless loop: fire task at AI, wait for it to “think about war and peace,” fix the obvious errors it introduced, repeat.

The token usage became a major bottleneck. Longer conversations burnt through tokens faster, leading to rate limits and cooldown periods whilst my app sat broken. Traycer would hit limits after just a few conversations, forcing me to start fresh and lose all context.

Complex codebases expose AI’s memory and consistency problems

As the codebase grew more complex, the real issues became apparent. The AI struggled with context and memory of decisions made earlier in the development process — something any developer would naturally track. When you started a new conversation, it was like talking to a completely new developer who had never seen the project before.

It was too quick to declare everything “done and working” without properly testing the work. The AI would confidently announce completion, then I’d discover broken namespaces, missing column names in models, or logic that didn’t actually work.

The work felt haphazard. It used different patterns throughout the codebase, sometimes over-abstracting simple concepts, other times creating massive functions that desperately needed breaking down. It struggled to stick to the rules I gave it, often forgetting key design decisions I’d explicitly outlined.

I got to a point where I couldn’t work out what I needed to say without it introducing more issues than it fixed.

So back to the web I went, looking for a new AI developer to help progress the project.

Claude with MCP servers: the first tool that actually works

That’s when I discovered Claude AI with MCP (Model Context Protocol) servers. A developer post claimed it could “supercharge Claude to work through complex problems” with web search capabilities and browser interface testing.

I installed Claude, set up MCP servers, and gave it a comprehensive task: review the entire codebase, suggest refactoring opportunities, identify issues, and create an implementation roadmap.

The difference was night and day. Claude thoroughly analysed the codebase and devised intelligent solutions. With MCP servers providing computer access, it could test frontend changes via Puppeteer, run Docker commands, and check current documentation online.

The error rate dropped significantly. Claude could iterate through testing cycles, fixing sequences of bugs until features worked properly. This was genuine AI pair programming — or more accurately, “AI does most of it whilst I check in occasionally.”

Where Traycer would hit token limits in a few conversations, Claude could consume vast amounts of context in a single conversation (though it could also hit limits faster when working with large codebases).

It was a massive improvement on Traycer (sorry, Traycer), and I could use it to plan and to review documentation. Its enhanced thinking meant it would weigh up several different ways of completing something and either present them to me or choose the most elegant solution.

Summarie ships to Chrome Web Store

Resisting the urge to micromanage, I let Claude organise the code architecture (it has a peculiar love affair with the service layer pattern). It completed the project to a fair standard and even passed Google’s Chrome Web Store verification process.

There were still occasional feedback loops with issues, but the difference was that Claude could diagnose and fix its own problems, because the MCP servers gave it access to my desktop, Docker, the web and its own instance of a Chrome browser.

You can also set project instructions within Claude and add documentation; this, I feel, is Claude’s superpower. It bases its decisions on them, so you can set the rules of the application here and it will refer to these rules before making any decision (most of the time).

So what does Summarie.co do?

The final application searches the DOM for terms and privacy policy links, automatically adding summary buttons to relevant pages. Users get instant summaries via the Chrome extension popup, powered by integrations with Jina.ai and Segmind for AI processing. The web dashboard lets users view previous summaries, check usage statistics, and manage credits.
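As a rough illustration of the link-detection step, here is a minimal sketch in Python rather than the extension's actual JavaScript. The keyword list, the class name `PolicyLinkFinder` and the helper `find_policy_links` are all assumptions made for this example; the real matching rules are not described in this post.

```python
from html.parser import HTMLParser

# Hypothetical keyword list, assumed for illustration only.
POLICY_KEYWORDS = ("terms", "privacy", "conditions")


class PolicyLinkFinder(HTMLParser):
    """Collect hrefs of <a> tags whose href or link text mentions a keyword."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> currently being read, if any
        self._text = []    # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            haystack = (self._href + " " + "".join(self._text)).lower()
            if any(word in haystack for word in POLICY_KEYWORDS):
                self.links.append(self._href)
            self._href = None


def find_policy_links(html):
    """Return the hrefs in html that look like terms or privacy policy links."""
    finder = PolicyLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.links
```

Simple keyword matching like this will produce false positives (a blog post about "privacy", say), so a real implementation would likely need stricter rules.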

Everything you see at summarie.co was designed and developed by AI with strategic prompting from me.

Claude excelled compared to others, but it still had its issues

Claude was head and shoulders above the other tools for development, but it still had its drawbacks. I kept thinking of it as a super-keen puppy of a developer who, left to its own devices, would overcomplicate logic trying to cover every possible edge case, always reaching for the most comprehensive solution rather than the most elegant one. It was keen to run ahead and carry on coding rather than stop at agreed milestones for review.

Claude also had an annoying habit of creating “test scripts” whenever it couldn’t get a response from the terminal through Desktop Commander, rather than asking for help or debugging the actual issue. Nothing that I said could stop it from doing this.

And the token limitations? A proper nightmare. It would sometimes leave whole files unfinished if it hit conversation limits, with half-implemented functions and incomplete logic scattered throughout the codebase.

I learnt pretty quickly to build git commits into its workflow after it completely bricked the application: it ran out of tokens partway through changing a file and hit a conversation limit. I was forced to spin up a new conversation, where I would meet Claude’s clone, Super Helpful Developer 58, who would read the documentation and then exclaim “I have found the issue!” before spiralling off into the codebase with changes.

Six essential strategies for AI development success

Through this frustrating but educational journey, here’s what actually matters when using AI coding assistants such as Claude:

Plan everything upfront. I mean everything — database schemas, flow diagrams, architectural decisions. The more detailed documentation you provide, the better chance AI has of building something coherent.

Commit early and often. After every significant change, commit to version control. When AI inevitably breaks something or leaves files unfinished due to token limits, you’ll thank yourself.

Don’t let it run ahead. AI is terrible at stopping at agreed milestones. It wants to keep coding and “improving” things. Stop it regularly and review what it’s actually built.

Keep reminding it about capabilities. With Claude, I constantly had to remind it that it had access to MCP servers for up-to-date information and libraries. It would default to outdated knowledge instead.

Slow down as complexity increases. As your codebase grows, you need to move slower and be more specific in your requests. Include what not to do, not just what you want.

Documentation helps, but isn’t foolproof. Documenting progress does reduce issues, but Claude can still ignore documented decisions or patterns you’ve established.
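The commit-often strategy above could be scripted roughly as follows. This is only a sketch of one way to do it, not the workflow I actually gave Claude: the helper names `checkpoint_commands` and `checkpoint` are made up for this example, and it assumes `git` is installed and the directory is already a repository.

```python
import subprocess


def checkpoint_commands(message):
    """Return the git commands for a checkpoint: stage everything, then commit."""
    return [
        ["git", "add", "-A"],
        ["git", "commit", "-m", message],
    ]


def checkpoint(message, repo_dir="."):
    """Run the checkpoint commands in repo_dir, stopping at the first failure."""
    for command in checkpoint_commands(message):
        # check=True raises CalledProcessError on a non-zero exit code,
        # which includes the case where there is nothing to commit.
        subprocess.run(command, cwd=repo_dir, check=True)
```

Calling `checkpoint("AI: add summaries controller")` after each agreed milestone gives you a known-good point to roll back to when a conversation runs out of tokens mid-change.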

Could you build a production app with AI? Possibly. Should you? Probably not.

Could you build a product with AI? Possibly — I did. Could I guarantee its stability? Absolutely not. Would I be confident scaling with this codebase? Not a chance, and this might catch a lot of vibe-coding start-ups off guard.

I don’t feel the same sense of accomplishment as a developer when using AI to write most of the code. It feels unsettling not understanding all the changes taking place, knowing there will come a time when I have to go in and fix things manually.

For prototyping, it’s a blast. You can get something working quickly to prove a concept or test market reactions. But I’d consider it a throwaway version — a proof of concept, not production code.

I imagine AI development will only get better, but I’m still not confident betting a business on it. The unpredictability and subtle bugs that emerge in complex codebases make it feel too risky for anything mission-critical.

The question isn’t whether AI will change development — it already has. It’s whether we can find the sweet spot between AI assistance and human oversight that actually produces reliable, maintainable software.

Check out Summarie at summarie.co, and read about my MCP setup process here. The app has just launched, so I’d love your feedback on this experiment.